FLUX.1 Gets ControlNets: On your marks...

- Olivia Cava

- Aug 9, 2024


Black Forest Labs, founded by former Stability AI engineers who were among the minds behind Stable Diffusion, recently unveiled FLUX.1, a suite of text-to-image models. The team has already made waves with this release, promising to democratize AI technology with accessible and highly efficient models. FLUX.1 comes in three flavors (pro, dev, and schnell), each tailored to different needs, from professional use to hobbyist experimentation. For more info, check our previous post!

FLUX.1 vs Stable Diffusion

| Control & Customization | Model Maturity & Stability | Innovation & Performance |
|---|---|---|
| Stable Diffusion offers mature ControlNets and extensions, providing users with advanced customization and control over the image generation process. This makes it suitable for users who need precise, tailored outputs. | Stable Diffusion is considered more mature, having undergone multiple iterations and improvements, which translates into a stable and reliable platform for various applications. | Stable Diffusion, while possibly slower in some respects, maintains a robust performance across a wider variety of tasks due to its established extensions and control features, making it a go-to for users who prioritize depth and versatility in image generation capabilities. |
| FLUX.1 is still developing its feature set to include similar levels of control and customization as Stable Diffusion, indicating that FLUX.1 may not yet fully support the same depth of user-guided modifications. | FLUX.1, while promising and showing rapid progress, still has ground to cover to achieve parity with Stable Diffusion in terms of stability and reliability across a broad range of use cases. | FLUX.1 has introduced innovative features such as rapid generation speeds and high prompt adherence, making it appealing for scenarios where speed and adherence to creative prompts are critical. |

Community contribution: ControlNets for FLUX.1

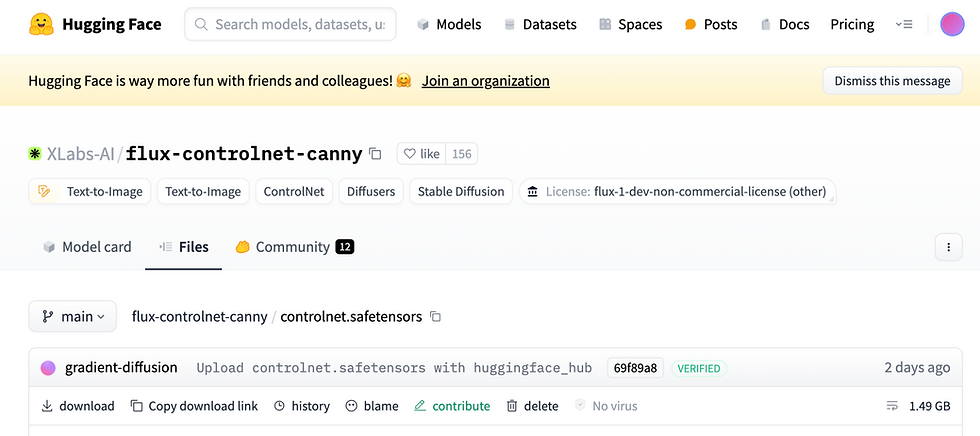

XLabs-AI just released a Canny ControlNet for FLUX.1! Check it out on Hugging Face.

This is a significant development because it begins to close some serious gaps between FLUX.1 and Stable Diffusion. Across social media, AI experts and hobbyists alike are floored by how quickly it was released.

"A lot of ground to cover before parity with Stable Diffusion but this is promising to see so quickly ... A lot of customers are asking about FLUX vs SD, but for now advising keeping everyone on SD who need mature ControlNets, extensions, etc." - Bill Cava, Generative Labs, LinkedIn

Understanding ControlNets

If you are unfamiliar, ControlNets are a tool for guiding AI models with higher precision. They let you orchestrate where and how certain elements appear in the final image. At their core, ControlNets are auxiliary networks attached to a generative model that feed it an extra conditioning input, such as an edge map or a pose skeleton, alongside the text prompt.
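To make that a little more concrete, here is a toy Python sketch of one common way to picture it: a control branch produces a residual that is added to the base model's internal features, scaled by a strength knob. This follows the general idea of the original ControlNet design and is not the FLUX.1 or XLabs-AI implementation. The function name `apply_control`, the `conditioning_scale` parameter, and all of the numbers are made up for illustration.

```python
# Toy sketch of ControlNet-style conditioning.
# Assumption: plain Python lists stand in for the model's feature tensors.

def apply_control(base_features, control_residual, conditioning_scale=1.0):
    """Add a scaled control residual to the base features, element-wise."""
    if len(base_features) != len(control_residual):
        raise ValueError("feature and residual lengths must match")
    return [
        b + conditioning_scale * r
        for b, r in zip(base_features, control_residual)
    ]


base = [0.5, -1.0, 2.0]      # hypothetical features from the base model
residual = [0.2, 0.4, -1.0]  # hypothetical output of the control branch

# A scale of 0 switches the control off, leaving the base features unchanged.
unguided = apply_control(base, residual, conditioning_scale=0.0)
# A larger scale lets the control signal pull the features harder.
guided = apply_control(base, residual, conditioning_scale=0.5)

print(unguided)
print(guided)
```

The point of the scale is that guidance is a dial rather than a switch: at zero the base model behaves as if no ControlNet were attached, and raising it gives the conditioning image more say.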

How do they work? Imagine you’re an artist, but instead of a brush, you wield a series of maps and guides:

| Semantic Maps | Edge Maps | Pose Maps |
|---|---|---|
| Let you specify what objects appear and where they are placed. It's like drawing a rough sketch of your painting before adding colors. | Define the shapes within your image. This could be compared to outlining forms with a pencil to ensure that each object is well-defined. | Are used to dictate the posture of figures, similar to how a director poses actors on a stage. |

You rarely need to draw these maps by hand. They are usually extracted from a reference image with tools such as Canny edge detection for edges or OpenPose for human figures, giving the AI model a clearer blueprint to follow.
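To get a feel for what an edge map actually is, here is a minimal, dependency-free Python sketch. It is a simplified stand-in, not real Canny: it computes a Sobel gradient magnitude and applies a single threshold, whereas Canny also smooths the image, thins edges with non-maximum suppression, and links them with hysteresis. The function name `sobel_edge_map`, the threshold value, and the tiny test image are assumptions for illustration.

```python
# Simplified edge-map sketch: Sobel gradient magnitude plus one threshold.
# This is NOT full Canny (no smoothing, non-maximum suppression, or hysteresis).

def sobel_edge_map(image, threshold=128):
    """Return a binary edge map (0 or 255) for a 2D grayscale image.

    `image` is a list of rows of integers in 0..255. Border pixels stay 0
    because the 3x3 kernels need a full neighborhood.
    """
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical gradient kernel
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += kx[dy][dx] * pixel
                    gy += ky[dy][dx] * pixel
            magnitude = (gx * gx + gy * gy) ** 0.5
            edges[y][x] = 255 if magnitude >= threshold else 0
    return edges


# Hypothetical 6x6 image: dark left half, bright right half.
image = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
edge_map = sobel_edge_map(image)

# Print the edge map as text: '#' marks an edge pixel, '.' marks background.
for row in edge_map:
    print("".join("#" if value else "." for value in row))
```

The output marks the vertical boundary between the dark and bright halves and nothing else, which is exactly the kind of outline a Canny ControlNet consumes. In practice you would reach for a library implementation (OpenCV ships one as `cv2.Canny`) rather than rolling your own.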

Why use ControlNets? Using ControlNets elevates your role from a mere spectator to an active participant in the creative process:

| Precision | Creativity | Efficiency |
|---|---|---|
| You get much closer to what you envision, as ControlNets reduce the randomness in AI-generated images. | They unlock a higher level of artistic freedom, allowing for complex compositions that would be difficult to achieve otherwise. | By providing detailed guides, ControlNets can significantly cut down on the time and effort needed to reach the desired result, especially for intricate scenes. |

In summary, ControlNets are not just a feature but a revolution in how we interact with generative AI. They don't just make the models smarter; they make the entire creative process more intelligent, giving users unprecedented control over their creations.

Future implications

The integration of ControlNets into FLUX.1 marks a significant stride towards narrowing the technological and functional gap with Stable Diffusion. This development not only enhances FLUX.1's capabilities but also sets the stage for a future where its potential can be fully realized through community-driven enhancements.

Bridging the Technological Gap: By incorporating ControlNets, FLUX.1 is beginning to offer the detailed control and customization that have long been hallmarks of Stable Diffusion. This advancement means that FLUX.1 is not just catching up in terms of features but is also enhancing the user experience and broadening the model's applicability in professional and creative settings.

Empowering the Community: The future of FLUX.1 looks particularly promising with the potential for further community-driven improvements. With openly available weights for two of its three variants (schnell under the Apache 2.0 license, dev under a non-commercial license), FLUX.1 provides a versatile foundation for developers, artists, and AI enthusiasts to build upon. Which brings us to our next point...

Call to action: We need YOU!

Okay, here's your homework:

Whether you're a developer, digital artist, or just curious about AI image generation, we want you to try out FLUX.1 and let us know how it goes. No matter the outcome, please share your generated images and feedback with us! Your experiences not only help us better understand the capabilities of FLUX.1 but can also inspire others in the community.
